Meta Platforms: Market Overestimates AI Costs, Underestimates Benefits

Meta Platforms (META) is positioned to benefit significantly from recent advances in AI technology. In terms of revenue generation, as well as cost and Capex, the company is a pure-play AI inference investment (as opposed to model training).

Recent developments, particularly the publication of the DeepSeek-R1 model, indicate that the cost of AI inference can be substantially reduced. The market’s response to initiatives such as the massive OpenAI-led Stargate investment program suggests that many investors do not recognize this potential for significant decreases in AI inference costs. As a result, META’s shares appear undervalued.

Commercializing AI Inference

Unlike some competitors, META does not derive meaningful revenue from AI training or from AI infrastructure marketed to third parties. This makes it a pure-play AI inference investment case.

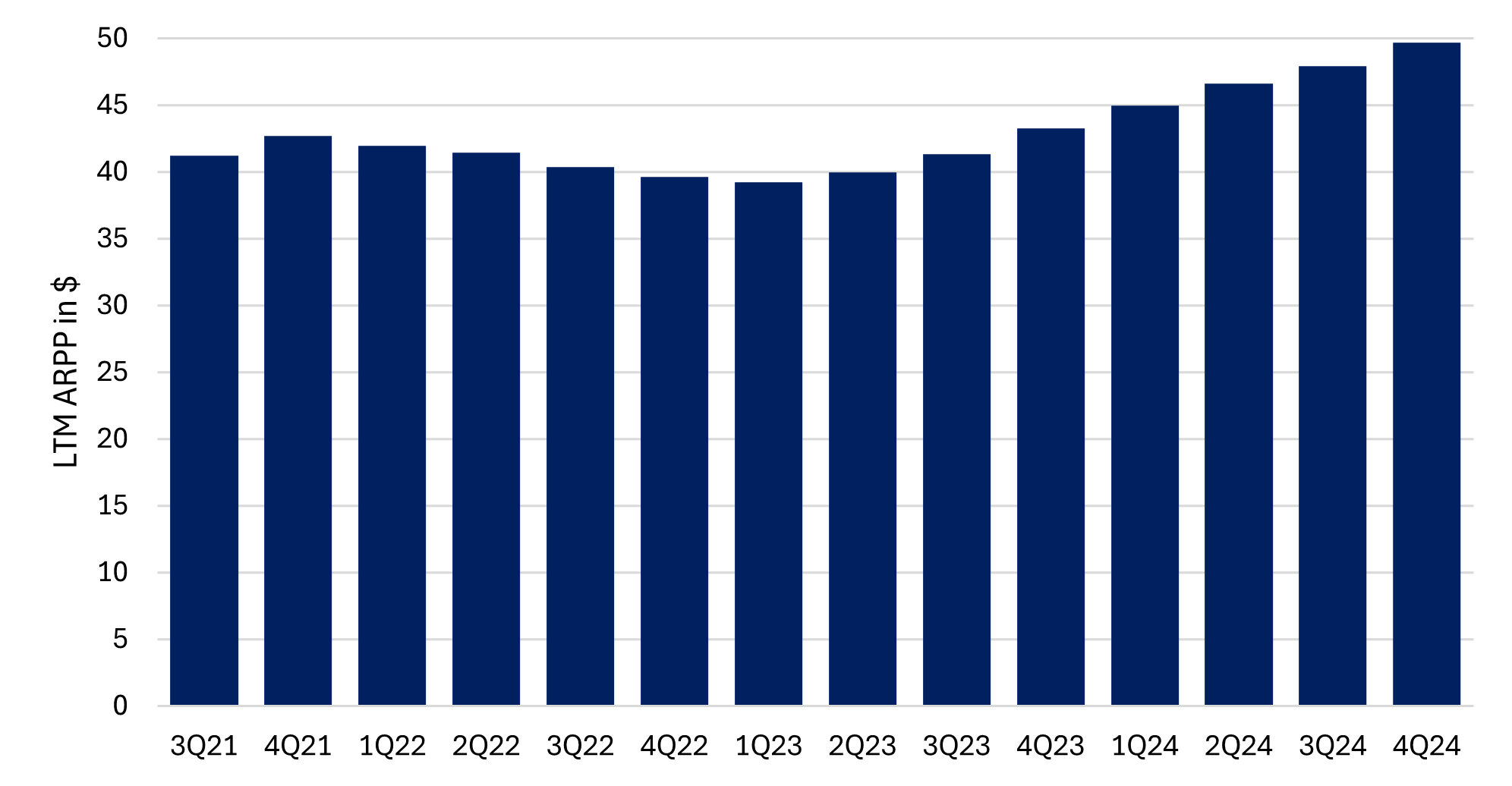

META commercializes AI inference by delivering more effective, better-targeted advertisements, which increases the value of its advertising inventory. On its most recent quarterly earnings call, the company reported that current models have improved ad conversion rates by 2-4%. Partly as a result, Average Revenue Per Person (ARPP) has risen by approximately 15% over the last year, to around $48 per Daily Active User (DAU) on an annualized basis.

As the quality of AI models continues to improve, it is reasonable to expect that the company can sustain a similar increase in ARPP over the next few years. Assuming constant DAU (a conservative assumption, given that META has increased DAU every quarter for at least the past five years), this would imply revenue of around $185 billion for FY25, up from guidance of approximately $161-164 billion for FY24. This projection is in line with market expectations, which range from $180 billion to $195 billion.
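The arithmetic behind this projection can be sketched as follows. It is a minimal back-of-the-envelope calculation, assuming an annualized ARPP of ~$48, a repeat of the ~15% growth, and a flat user base of 3.29bn (the daily-active figure used later in this article); the function and variable names are illustrative.

```python
# Back-of-the-envelope FY25 revenue projection (illustrative, not guidance).
# Assumptions: ~$48 annualized ARPP, ~15% ARPP growth, flat 3.29bn daily users.

ARPP_FY24 = 48.0        # annualized revenue per daily active user, USD
ARPP_GROWTH = 0.15      # assumed repeat of last year's ~15% increase
DAILY_ACTIVE_BN = 3.29  # daily active users, billions, held constant


def projected_revenue_bn(arpp, growth, daily_active_bn):
    """Return projected annual revenue in USD billions."""
    return arpp * (1 + growth) * daily_active_bn


fy25 = projected_revenue_bn(ARPP_FY24, ARPP_GROWTH, DAILY_ACTIVE_BN)
print(f"Projected FY25 revenue: ${fy25:.1f}bn")
```

This gives roughly $182bn, within a few billion of the ~$185 billion figure; the exact result depends on how the inputs are rounded.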

Surprises in revenue are more likely to be on the upside due to advancements in AI inference, which should further enhance the value of the company’s ad inventory and, thus, ARPP.

Cost Structure Aligned to AI Inference

META’s main potential catalyst is the market realizing that the Capex and Opex linked to AI inference are significantly lower than anticipated.

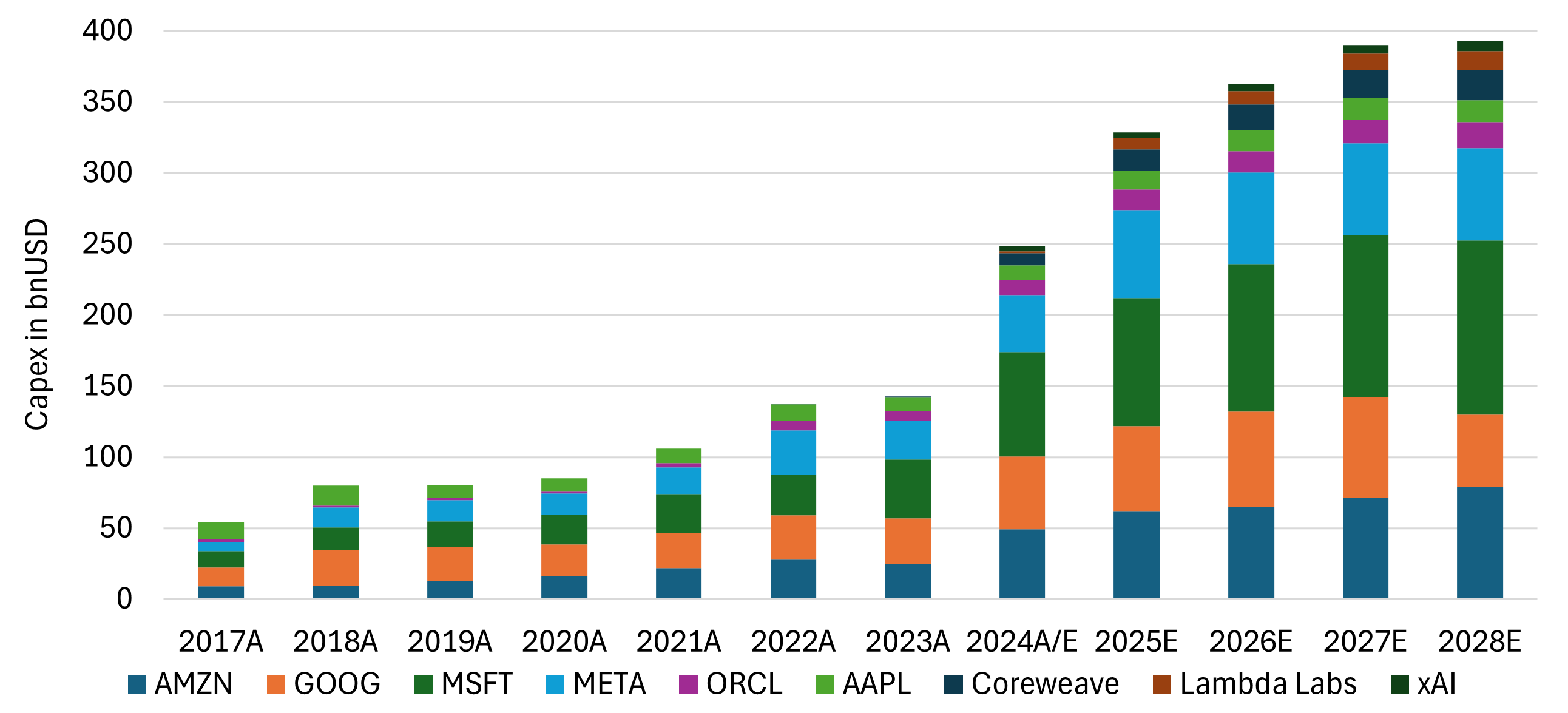

The rise of AI has generally been associated with substantial Capex among major technology providers. For instance, Microsoft (MSFT) is planning Capex of about $80 billion in its current fiscal year, driven primarily by spending on AI infrastructure. META itself has increased Capex from approximately $20 billion in FY21 to $38-40 billion in FY24. The recently announced Stargate project, with planned investment of up to $500 billion, including an initial $100 billion, reinforces the market’s perception that more AI requires more Capex.

However, there are two important points on which the market view may be challenged. First, as mentioned earlier, META’s exposure lies mainly in AI inference rather than model training. Although the company has developed its own models, such as Llama, and is reportedly training version 4, it emphasizes that its model development is focused on its specific inference needs. When asked why the Llama models were open-sourced, CEO Mark Zuckerberg highlighted the desire to learn from external use cases in order to lower inference costs.

The company has also disclosed that the overwhelming majority of its GPU hardware is used for inference rather than model training, which further suggests that it sees AI primarily as an inference play.

Second, recent advances in technology suggest that the costs of inference (both Opex and Capex) are likely to decrease considerably. DeepSeek recently published its DeepSeek-R1 model, which performs comparably to OpenAI’s latest model but is available at a price that is 96% lower ($26.25 per million tokens for OpenAI versus $0.96 for DeepSeek-R1).
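The quoted per-million-token figures can be reproduced as blended prices. This sketch assumes list prices of $15 (input) and $60 (output) per million tokens for OpenAI’s o1, and $0.55 and $2.19 for DeepSeek-R1, weighted 3:1 input to output. These inputs are an assumption rather than figures stated in this article, although they reproduce both quoted numbers; `blended_price` is an illustrative helper.

```python
# Blended price per million tokens under an assumed 3:1 input-to-output mix.
# Assumed list prices (USD per 1M tokens): o1 $15 in / $60 out,
# DeepSeek-R1 $0.55 in / $2.19 out.

def blended_price(input_price, output_price, input_share=0.75):
    """Weighted average price per million tokens for a given input share."""
    return input_share * input_price + (1 - input_share) * output_price


openai_o1 = blended_price(15.00, 60.00)
deepseek_r1 = blended_price(0.55, 2.19)

reduction = 1 - deepseek_r1 / openai_o1   # fractional price reduction
price_ratio = openai_o1 / deepseek_r1     # how many times more expensive o1 is

print(f"o1: ${openai_o1:.2f}  R1: ${deepseek_r1:.2f} per 1M tokens")
print(f"Reduction: {reduction:.0%}  Ratio: {price_ratio:.1f}x")
```

It prints blended prices of $26.25 and $0.96, a reduction of about 96%, and a price ratio of roughly 27x. If both providers price close to break-even, that ratio would also approximate the gap in per-token serving cost.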

By all indications, OpenAI is pricing its own models at or below break-even on an opex basis. The company was reportedly expected to lose about $5bn in FY24 on $3.7bn of revenue, and CEO Sam Altman has publicly acknowledged that the company is losing money on its “Pro” subscription. This is further supported by the apparent need to invest $500bn over the next few years in project “Stargate” to support model training and inference.

There is currently no indication that DeepSeek is subsidizing its prices below opex, as the company does not appear to have outside investors who could fund such subsidies. This suggests that DeepSeek has a commercial incentive to at least recover its opex. Even if DeepSeek is also only breaking even, the gap between its operating costs and OpenAI’s would be substantial, because at break-even the price gap mirrors the cost gap. A difference of that size is plausible only if DeepSeek has achieved breakthroughs that allow it to run inference at considerably lower cost.

Market Rerating Potential

If such breakthroughs transfer to other AI models, they would substantially reduce META’s AI costs and Capex requirements. META currently guides FY24 Capex of $38-40bn and FY24 cost of revenue (which includes costs such as electricity for running its servers) of ~$30bn.

Before the emergence of generative AI models, the company spent $6.5-8.0 of Capex and ~$8.20 of cost of revenue per daily active person (DAP) per year. Applied to FY24’s 3.29bn DAP, these ratios suggest ex-AI Capex of roughly $22-26bn and ex-AI cost of revenue of roughly $26-27bn. Subtracting these baselines from the FY24 figures, we can estimate AI-related Capex at roughly $12-18bn and AI-related cost of revenue at roughly $3-4bn.
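The baseline-and-residual estimate can be sketched as follows, using the article’s approximate per-DAP ratios and FY24 figures. It assumes the pre-AI per-DAP ratios would have held in FY24 absent AI, which is a simplification; variable names are illustrative.

```python
# Split FY24 Capex and cost of revenue into a pre-AI baseline and an
# AI-related residual, using the article's approximate inputs.

DAP_BN = 3.29                    # FY24 daily active people, billions
CAPEX_FY24_BN = (38.0, 40.0)     # guided FY24 Capex range, USD bn
COST_OF_REVENUE_FY24_BN = 30.0   # approx. FY24 cost of revenue, USD bn
CAPEX_PER_DAP = (6.5, 8.0)       # pre-AI annual Capex per DAP, USD
COST_OF_REVENUE_PER_DAP = 8.20   # pre-AI annual cost of revenue per DAP, USD

# Baseline ("ex-AI") spend if FY24 scaled like the pre-AI business.
ex_ai_capex = tuple(rate * DAP_BN for rate in CAPEX_PER_DAP)
ex_ai_cost_of_revenue = COST_OF_REVENUE_PER_DAP * DAP_BN

# Widest AI-related range: low guidance minus high baseline,
# and high guidance minus low baseline.
ai_capex = (CAPEX_FY24_BN[0] - ex_ai_capex[1],
            CAPEX_FY24_BN[1] - ex_ai_capex[0])
ai_cost_of_revenue = COST_OF_REVENUE_FY24_BN - ex_ai_cost_of_revenue

print(f"Ex-AI Capex: ${ex_ai_capex[0]:.1f}-{ex_ai_capex[1]:.1f}bn")
print(f"AI-related Capex: ${ai_capex[0]:.1f}-{ai_capex[1]:.1f}bn")
print(f"Ex-AI cost of revenue: ${ex_ai_cost_of_revenue:.1f}bn")
print(f"AI-related cost of revenue: ${ai_cost_of_revenue:.1f}bn")
```

With these inputs the sketch gives ex-AI Capex of about $21.4-26.3bn, AI-related Capex of about $11.7-18.6bn, ex-AI cost of revenue of about $27.0bn, and AI-related cost of revenue of about $3.0bn. Because the AI-related figures are small residuals of large totals, modest changes in the baseline ratios shift them noticeably.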

While a reduction in AI-related Capex and cost as large as the 96% implied by DeepSeek’s pricing is unlikely, even a 20% reduction would add $3-4bn of free cash flow per year from lower inference costs alone.
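A simple sensitivity check shows how the free-cash-flow figure scales with the size of the reduction. This sketch uses the article’s AI-related Capex range of $12-18bn and about $4bn of AI-related cost of revenue, as estimated above, and treats savings as a dollar-for-dollar free-cash-flow gain, ignoring taxes and timing (a simplifying assumption). `fcf_uplift_bn` is an illustrative helper name.

```python
# Hypothetical sensitivity check: annual FCF uplift if AI-related Capex and
# cost of revenue both fall by the same percentage. Savings are treated as a
# dollar-for-dollar FCF gain, ignoring taxes and timing (an assumption).

AI_CAPEX_BN = (12.0, 18.0)      # AI-related Capex range from the text, USD bn
AI_COST_OF_REVENUE_BN = 4.0     # AI-related cost of revenue, USD bn


def fcf_uplift_bn(reduction):
    """Return the (low, high) annual FCF uplift in USD bn for a fractional cut."""
    low = reduction * (AI_CAPEX_BN[0] + AI_COST_OF_REVENUE_BN)
    high = reduction * (AI_CAPEX_BN[1] + AI_COST_OF_REVENUE_BN)
    return low, high


for cut in (0.10, 0.20, 0.50):
    low, high = fcf_uplift_bn(cut)
    print(f"{cut:.0%} reduction -> ${low:.1f}-{high:.1f}bn per year")
```

At a 20% reduction this gives about $3.2-4.4bn per year, consistent with the $3-4bn cited above.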

If the market comes to recognize that Opex and Capex for AI inference may be considerably lower than currently thought, META could be rerated. The company also offers one of the cleanest and clearest ways to profit from any further advances in AI.

Final Thoughts

Meta’s AI strategy, which focuses on inference rather than training, positions it as a highly efficient, AI-driven advertising business. Investors evaluating its long-term prospects should weigh the efficiency of its AI spending, its revenue growth potential, and its scope for cost savings. Given its robust advertising model, cost-effective inference capabilities, and continued innovation in digital engagement, Meta remains a compelling investment opportunity as AI reshapes the economy.

Check out the Marvin Labs App for more AI-driven insights on Meta Platforms and other companies.

Alex is the co-founder and CEO of Marvin Labs. Prior to that, he spent five years in credit structuring and investments at Credit Suisse. He also spent six years as co-founder and CTO at TNX Logistics, which exited via a trade sale. In addition, Alex spent three years in special-situation investments at SIG-i Capital.

Image Attribution: The header image for this article is licensed from Mariia Shalabaieva on Unsplash and lightly edited by Marvin Labs with AI.